Whilst many people will tonight attempt to find their universe at the bottom of a glass, it appears that condensed matter scientists will be attempting to find it at the bottom of a test-tube. Making a late entry into the 'most ludicrous claim made by a scientist during the year' competition is Richard Haley, who claims, on the strength of an analogy between the theoretical representation of superfluid helium-3 and a certain way of theoretically representing space-time, that by obtaining a superfluid state of helium-3 in a test-tube, "in effect, we have made a universe in a test tube."

Helium-3 in a superfluid state is a Bose-Einstein condensate. This is significant because helium-3 atoms are fermions, whereas Bose-Einstein condensates can only be formed by collections of bosons. To form a superfluid state, pairs of helium-3 atoms must become correlated at very low temperatures, in a manner analogous to the Cooper pairs of electrons in superconductivity. Each such pair of helium-3 atoms forms a boson, thereby enabling the formation of a Bose-Einstein condensate.

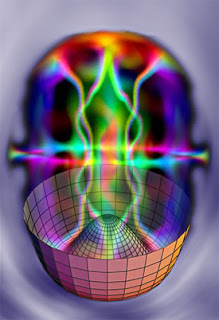

Now, the metric tensor used to represent space-time can, reputedly, be built from two copies of a fermionic coframe field. Hence, there is an analogy between this and a bosonic condensate built from Cooper pairs of helium-3 atoms.

"Consequently," claims Haley, "the superfluid can be used to simulate particle and cosmic phenomena; black holes, cosmic strings and the Big Bang for instance."

I don't know enough about condensed matter physics to assess this claim. However, I do know that aerodynamicists working with scale models in wind tunnels often find it extremely difficult to reliably infer conclusions that apply at full length scales. And in this case, one has more than a merely formal analogy: one is working with exactly the same medium (namely, airflow over a 3-dimensional object) about which one is attempting to infer conclusions.