If you take the statements about entropy in almost every elementary textbook, and indeed most advanced ones, they are contradicted when the gravitational field is turned on and is significant. For example, in the famous case of the gas container split into two halves by a barrier, with all the gas initially on one side, the standard statement is that the gas then spreads out to uniformly fill the whole container when the barrier is removed, with the entropy correspondingly increasing. But when gravitation is turned on, the final state is with all the matter clumped in a blob somewhere in the container, rather than being uniformly spread out...The question then is whether there is a definition of entropy for the gravitational field itself (as distinct from the matter filling space-time), and if so if the second law of thermodynamics applies to the system when this gravitational entropy is taken into account

...This issue remains one of the most significant unsolved problems in classical gravitational theory, for as explained above, even though this is not usually made explicit, it underlies the spontaneous formation of structure in the universe - the ability of the universe to act as a 'self-organizing' system where ever more complex structures evolve by natural processes, starting off with structure formed by the action of the gravitational field. (George Ellis, Cosmology and local physics).

As cosmologist George Ellis notes, for systems in which gravity plays no role, the states of maximum entropy are indeed the states in which the parts of the system are uniformly distributed in space. By virtue of the fact that gravity transforms initially uniform distributions of gas into localised agglomerations, such as stars, it is often concluded, perhaps complacently, that gravitational processes are capable of lowering the entropy of matter. As the quote from Ellis demonstrates, this thought is then often conjoined with the proposal that the second law of thermodynamics can only be preserved by taking into account the entropy of the gravitational field associated with such concentrations of matter.

However, Oxford philosopher of physics David Wallace has brilliantly dispelled misconceptions such as these in his recent paper, *Gravity, entropy and cosmology: in search of clarity*, and we shall attempt here to provide, appropriately enough, a condensed version of Wallace's exposition.

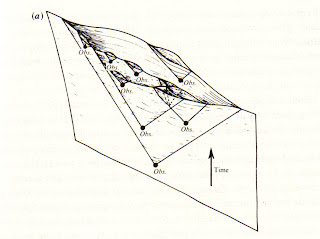

Begin by recalling that entropy is a property possessed by the states of physical systems. Classical physics conceives each physical system to possess a huge, multi-dimensional space of possible states, called the phase space of the system. The phase space is partitioned into regions called macrostates, each consisting of states which share macroscopically indistinguishable properties. If there is any ambiguity, the exact states (the points of the phase space) are then referred to as the microstates.

The entropy of a state is then defined to be a measure of the size of the macrostate volume in phase space to which that state belongs. Thus, the entropy of a state is a measure of how typical that state is within the entire space of possible states. The remorseless increase of entropy, enshrined in the second law of thermodynamics, is simply a reflection of the fact that the state of a closed system will, most probably, move into the regions of phase space which possess the greatest volume. (In this context, a closed system is a system in which there are no flows of matter or energy into or out of the system.)
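The idea that entropy measures the size of a macrostate can be illustrated with a toy version of the gas container from the Ellis quote, with gravity switched off. The sketch below is only an illustration under stated assumptions: the coarse-graining (a macrostate is labelled by how many of the particles sit in the left half, ignoring momenta), the particle number and the function name `macrostate_entropy` are choices made here, not anything taken from Wallace's paper.

```python
from math import comb, log

def macrostate_entropy(n_total, n_left):
    """Boltzmann entropy, in units of k_B, of the macrostate
    'n_left of the n_total particles are in the left half'.

    Assumption: every assignment of particles to halves counts as one
    microstate, so the macrostate size is the binomial coefficient.
    """
    return log(comb(n_total, n_left))

n_total = 100  # hypothetical, deliberately small particle number

# Entropy of every macrostate, from 'all on the right' to 'all on the left'.
entropies = {n_left: macrostate_entropy(n_total, n_left)
             for n_left in range(n_total + 1)}

# The largest macrostate is the most typical one.
most_typical = max(entropies, key=entropies.get)
```

In this gravity-free toy model the macrostate with all the gas on one side contains a single microstate and has zero entropy, while the evenly split macrostate is the largest, which is the textbook statement that the gas spreads out uniformly.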

Turning to the question of gravitational contraction, it is vital at the outset to correct the following potential misconception. One might assume that, given a small initial perturbation in an otherwise uniform distribution of interstellar gas, a small region in which the density of matter becomes higher than the average, the gravity of that region will then attract more matter from the surroundings, and a positive feedback process will then ensue. One might think that as the excess density of matter increases, a greater force is exerted on the surrounding matter, thus increasing the agglomeration yet further, in a looped process, until eventually a star is formed. In fact, most interstellar gas is extremely reluctant to contract, simply due to its thermal pressure, which balances any attractive gravitational force.

This, however, is not because the contraction of a system necessarily reduces its entropy. As Wallace emphasises, whilst the contraction of a system to a smaller volume of space has an entropy-decreasing effect, the contraction will also raise the temperature of the system, and this has an entropy-increasing effect. One has to do the sums to work out whether the net effect is to increase or decrease the entropy.

It transpires that if the initial total energy of a system is positive (i.e., if the sum of the gravitational potential energy U and the kinetic energy K is greater than zero, E = U + K > 0), then the entropy is maximised by the expansion of the system. In such a system, the typical velocity of the constituent particles exceeds the gravitational escape velocity of the system, hence the expansion of the system is easy to understand from this perspective. If, however, E = U + K < 0, then the system is said to be gravitationally bound, and in this case it is actually contraction which maximises the entropy. This, then, immediately demonstrates the complacency of the assumption that gravitational contraction is an entropy-lowering process.
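The link between the sign of E and the escape velocity can be made concrete for a single test particle at the edge of a spherical mass, where the condition K + U > 0 is exactly the condition that its speed exceeds the escape velocity. The following is a minimal sketch under that simplifying assumption; the function names and the cloud mass and radius are hypothetical values chosen for illustration, not figures from the source.

```python
from math import sqrt

G = 6.674e-11  # Newton's gravitational constant, m^3 kg^-1 s^-2

def escape_velocity(mass_kg, radius_m):
    """Speed at which kinetic energy exactly cancels potential energy."""
    return sqrt(2.0 * G * mass_kg / radius_m)

def energy_per_unit_mass(speed, mass_kg, radius_m):
    """E/m = K/m + U/m for a test particle at distance radius_m."""
    return 0.5 * speed**2 - G * mass_kg / radius_m

def is_gravitationally_bound(speed, mass_kg, radius_m):
    """Bound means negative total energy, E = U + K < 0."""
    return energy_per_unit_mass(speed, mass_kg, radius_m) < 0.0

# Hypothetical cloud: roughly one solar mass spread over 1e15 metres.
cloud_mass = 2.0e30
cloud_radius = 1.0e15
v_esc = escape_velocity(cloud_mass, cloud_radius)

slow_particle_bound = is_gravitationally_bound(100.0, cloud_mass, cloud_radius)
fast_particle_bound = is_gravitationally_bound(1000.0, cloud_mass, cloud_radius)
```

With these illustrative numbers the escape velocity is a little over 500 m/s, so a particle moving at 100 m/s is bound and one moving at 1000 m/s is not.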

Nevertheless, such contraction can shrink a system to no less than half of its initial radius, and so fails to explain the formation of stars from interstellar gas clouds. Instead, the formation of stars is dependent upon the existence of a mechanism for removing heat (and therefore energy) from the contracting system.

Suppose the initial state is one in which thermal pressure balances the gravitational attraction, but suppose that there is then some heat flow out of the system. The kinetic energy of the system K, and therefore its thermal pressure, will reduce, as will the total energy E. As a result of the reduced thermal pressure, the system will contract, reducing the gravitational potential energy U of the system, and converting it into a kinetic energy K which is greater than the initial kinetic energy. Thus, the removal of heat from such a gravitationally bound system will actually increase its temperature. (Such a system is said to possess a negative heat capacity). This, in turn, will create a greater temperature gradient between the system and its surroundings, leading to more heat flow out of the system, and to further contraction. This process will continue until the pressure becomes sufficiently great at the centre of the contracting mass that nuclear fusion is triggered. As long as the heat produced by nuclear fusion is able to balance the heat flow out of the system, the thermal pressure will balance the gravitational force, and the contraction will cease.
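The bookkeeping in this argument can be sketched numerically. The sketch assumes, beyond what the text states, that the system is a monatomic ideal gas with K = (3/2) N k_B T and that it passes through a sequence of equilibrium states satisfying the virial theorem, 2K + U = 0, so that E = -K and U = 2E. The function names and the particle number and energies are hypothetical, chosen only for illustration.

```python
K_B = 1.380649e-23  # Boltzmann constant, J/K

def virial_state(total_energy, n_particles):
    """Return (K, U, T) for a bound system in virial equilibrium.

    Assumptions: 2K + U = 0 and E = K + U, hence K = -E and U = 2E;
    monatomic ideal gas, K = (3/2) N k_B T.
    """
    if total_energy >= 0:
        raise ValueError("virial equilibrium requires a bound system, E < 0")
    kinetic = -total_energy
    potential = 2.0 * total_energy
    temperature = 2.0 * kinetic / (3.0 * n_particles * K_B)
    return kinetic, potential, temperature

def heat_capacity(n_particles):
    """dE/dT under the same assumptions: negative, -(3/2) N k_B."""
    return -1.5 * n_particles * K_B

n_particles = 1.0e57  # hypothetical particle number
before = virial_state(-1.0e40, n_particles)
after = virial_state(-1.2e40, n_particles)  # after radiating 0.2e40 J of heat

# With U proportional to -1/R, the radius scales inversely with |U|.
radius_ratio = before[1] / after[1]
```

Under these assumptions, losing heat lowers E and U, raises K and the temperature, and shrinks the radius (here `radius_ratio` is below one), which is the negative heat capacity described above.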

Whilst this process successfully explains star formation, it is a mechanism which requires heat flow out of the system, and this is an entropy-decreasing effect. Given that the reduction in spatial volume also constitutes an entropy-decreasing effect, it can be safely concluded that the entropy of the matter in a gravitationally contracting system decreases. However, contrary to the suggestions made by Ellis and others, the entropy of the gravitational field does not need to be invoked and defined in order to reconcile this fact with the second law of thermodynamics.

As a gravitationally-bound system contracts, the frequency of the collisions between the constituent particles increases, and a certain fraction of those interactions will be so-called inelastic collisions, in which the atoms or molecules are raised into excited energy states. Those excited states decay via the emission of photons, and this electromagnetic radiation is then lost to the surroundings. It is this radiative emission which is the most effective means by which heat is transferred from the contracting body to its lower temperature surroundings. And crucially, the entropy of this radiation is sufficiently huge that it easily compensates, and then some, for the lower entropy of the contracting matter. The total entropy of a contracting gravitational system therefore increases, as long as one counts the contribution from the electromagnetic radiation.

It would be easy to think that the existence of night is solely a consequence of the rotation of the Earth and its location relative to the Sun. But it is not. It is a consequence of the expansion of the Universe. If the Universe were not expanding then, wherever we looked into space, our line of sight would end at a star. The result would be like looking into a forest of trees. In a universe that didn't expand, the whole sky would resemble the surface of a star; we would be illuminated by perpetual starlight. What saves us from this everlasting light is the expansion of the Universe. It degrades the intensity of the light from distant stars and galaxies, and it leaves the night sky dark. (John Barrow, The Artful Universe, p45.)

As the Sun sets in Singapore, and European visitors fight the disruption to their circadian cycles, the aesthetics of Formula 1 undergoes a phase transition. A silver strip of metal halide light runs between the colonial palm trees, beneath the bejewelled post-modernist towers, underneath the concrete stanchions of the flyovers, alongside the armadillo-contoured concert hall and theatre, and beside the neoclassical and Palladian civic architecture. Specular reflections shimmer from the compound surfaces of the cars; the Ferraris become molten lava, and the McLarens dissolve into liquid metal.