Yesterday I highlighted the inadequacies and assumptions in the scenarios which form the input for Global Climate Models (GCMs). Today, I'd like to pinpoint the inadequacies of the GCMs themselves. The key point to appreciate about most computer simulations in science is that they rarely simulate all the relevant processes involved in the system under study. For all but the simplest of systems, it is necessary to parameterise various things. The behaviour and effects of parameterised processes and objects are not simulated, merely set by hand. The parameters represent the average or approximate effect of the things which cannot be simulated.

Simulation methodology requires that one validates a simulation by testing that it is capable of reproducing known data as output. In the case of a GCM, validation requires the simulation to reproduce the known global temperature profile up to the present day for the known profile of CO2 and SO2 emissions. However, there is a methodological problem here which besets many scientific simulations: the setting of the simulation parameters (a process called calibration) has to occur at the same time as validation. One adjusts the values of the parameters until the simulation reproduces the desired output. There is an obvious problem here: if the underlying logic of the model is incorrect, that fact can be concealed during calibration by adjusting the simulation parameters until the combination of parameter values compensates for the inaccuracy of the underlying model, and the desired output is obtained. To reduce the probability of this, one needs to calibrate a simulation against a number of different scenarios; one needs to obtain a fixed combination of parameter values which are such that the simulation reproduces actual data across a range of different scenarios. It is less likely that a specific choice of parameter values could compensate for inadequacies in the simulation model across a range of different scenarios.
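The compensation effect described above can be seen in a toy sketch. Everything here is hypothetical: `true_system`, `wrong_model`, the single parameter `k` and the two scenarios are invented for illustration and have nothing to do with any real climate model. The flawed model omits one of the two drivers, yet calibrating `k` against a single scenario hides the omission completely.

```python
# Toy illustration only: none of these numbers come from a real climate model.

def true_system(x, z):
    """Hypothetical 'real' process, driven by two inputs x and z."""
    return 2.0 * x + 3.0 * z

def wrong_model(x, k):
    """Deliberately flawed model: it ignores z and has one tunable parameter k."""
    return k * x

def calibrate(scenarios):
    """Least-squares fit of k against every (x, z) point in the given scenarios."""
    num = sum(x * true_system(x, z) for sc in scenarios for x, z in sc)
    den = sum(x * x for sc in scenarios for x, z in sc)
    return num / den

def rms_error(scenario, k):
    """Root-mean-square mismatch between the flawed model and the 'data'."""
    sq = [(wrong_model(x, k) - true_system(x, z)) ** 2 for x, z in scenario]
    return (sum(sq) / len(sq)) ** 0.5

# Scenario A: z happens to track x, so k can absorb the missing z term.
scenario_a = [(x, x) for x in range(1, 11)]
# Scenario B: z stays constant, so that compensation no longer works.
scenario_b = [(x, 1.0) for x in range(1, 11)]

k_single = calibrate([scenario_a])             # calibrated on one scenario
k_joint = calibrate([scenario_a, scenario_b])  # calibrated on both at once

print("k from A alone:", k_single)
print("error on A:", rms_error(scenario_a, k_single))
print("error on B:", rms_error(scenario_b, k_single))
print("joint k:", k_joint)
print("joint errors:", rms_error(scenario_a, k_joint), rms_error(scenario_b, k_joint))
```

Calibrated on scenario A alone, the flawed model reproduces the data exactly, but it misses badly on scenario B. Calibrated on both together, no single value of `k` fits either scenario well, which is precisely the signal that the model's logic, rather than its parameters, is at fault.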

However, for GCMs, there is a big problem here: there is only one set of data to validate and calibrate against; GCMs can only be calibrated and validated against 20th century climate data. This means that GCMs cannot be properly calibrated and validated until the parameterised processes are de-parameterised, and properly simulated. In the case of GCMs, the parameterised processes are typically things like cloud cover formation, which have a significant effect on global temperature, but which lie below the resolution level of the simulations. Sure enough, the various GCMs used by the various climatological research institutions around the world produce a large range of different predictions for the global temperature profile in the 21st century.

There is an even more severe problem with the validation and calibration of GCMs against 20th century climate data: not one of the models is capable of accurately reproducing the temperature profile throughout the 20th century. The 20th century basically divides into three phases from a global climate point of view: there was a period of rapid warming, but negligible CO2 emissions between 1900 and 1940; a period of global cooling from 1940 until 1975 despite significant increases in CO2 emissions; and a second period of significant warming from 1975 until the end of the century, in concert with a significant increase in CO2 emissions. The GCMs are capable of reproducing the temperature profile from 1975 onwards, but they tend to struggle with the temperature behaviour prior to that. One can postulate that the cooling from 1940 to 1975 occurred because several volcanic eruptions pumped SO2 into the atmosphere, and this had a cooling effect. However, the rapid temperature increase in the first part of the century, without a corresponding increase in CO2 emissions, is difficult for the GCMs to explain. Perhaps the best explanation is that patterns of solar radiation, such as sunspot cycles, were responsible for this temperature increase. If so, however, then one must acknowledge that current variations in global temperatures are a function of both solar radiation and CO2 emissions.

Tuesday, January 30, 2007

Monday, January 29, 2007

Global climate change and the IPCC

On Friday of this week, the Intergovernmental Panel on Climate Change (IPCC) releases the first part of its fourth report. Like the previous IPCC reports in 1990, 1996 and 2001, this document will attempt to assimilate and distill all the climate-change research done over the previous 5 years or so, and to produce a number of 'headline' statements intended to influence international political policy-makers.

Of particular interest is the predicted effect of CO2 emissions upon global temperatures by 2100. The IPCC obtains a predicted temperature range by taking the output from a number of different Global Climate Model (GCM) supercomputer simulations, conducted by various climate research institutions across the world. Whilst the 2001 report predicted a global temperature increase of 1.4-5.8°C by 2100, the New Scientist article suggests that the new predicted temperature increase will be 2.0-4.5°C. However, I am very interested to find out the specifics which underlie this prediction.

The range of the temperature uncertainty here comes both from the range of different GCMs, and the range of different emission scenarios which are input into the models. The global temperature profile over the 21st century which a GCM outputs depends upon the 'scenarios' input into the simulation, and the fidelity of the simulation model itself. The future CO2 emission profile (and that of the other greenhouse gases) and the sulphate emission profile largely define a scenario. The simulation scenarios which obtained a temperature increase above 2.5°C in the 2001 IPCC report tended to assume (i) that CO2 emissions would rise by 1% each year, and (ii) that SO2 (sulphate) emissions would drop off significantly beyond 2030. See, for example, the SRES A2 scenario.

A number of critical points were made about these scenarios in Bjorn Lomborg's book, 'The Skeptical Environmentalist', and these same points raise questions about the 2007 report:

Sulphates are thought to have a cooling effect on the Earth, but because they are associated with pollution, it is assumed that legislation will remove this cooling effect beyond 2030. If it is now assumed in the 2007 scenarios that sulphate emissions will be reduced earlier, then the GCMs will predict higher temperature increases for this reason.

Actual CO2 emissions rose by about 0.4% per year throughout the 1980s and 1990s, so if this rate of increase were to continue, it follows that the 2001 IPCC scenarios greatly over-estimated the amount of global warming. However, I read now that CO2 emissions have increased by 3% over the past 5 years, which is approximately a 0.6% annual increase. This, perhaps, is a consequence of Chinese economic growth. I would like to know what CO2 increases have been assumed in the current IPCC report, and whether those assumptions can be justified by global economic projections.
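The arithmetic behind these growth rates is easy to check. The sketch below assumes simple compound growth; the function names and the 100-year horizon are illustrative choices, not anything taken from the IPCC scenarios.

```python
def annual_rate(total_growth, years):
    """Constant yearly rate which compounds to total_growth over the given years."""
    return (1.0 + total_growth) ** (1.0 / years) - 1.0

def cumulative_growth(rate, years):
    """Total fractional growth after compounding a yearly rate for the given years."""
    return (1.0 + rate) ** years - 1.0

# A 3% rise over 5 years, expressed as a yearly rate.
rate = annual_rate(0.03, 5)
print(f"equivalent annual rate: {rate:.2%}")

# Compare the three yearly rates mentioned in the text over an assumed horizon.
horizon = 100  # years; an illustrative choice
for yearly in (0.004, 0.006, 0.01):
    total = cumulative_growth(yearly, horizon)
    print(f"{yearly:.1%} per year for {horizon} years -> {total:.0%} total increase")
```

A 3% rise over 5 years works out at roughly 0.59% per year. The comparison over a century shows why the assumed rate matters so much: compounding at 1% per year produces several times the cumulative increase of compounding at 0.4%.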

The price of solar power is decreasing by 50% per decade, and, if this continues, solar power will be a competitive alternative to fossil fuels by 2030-2040. Whilst the IPCC scenario referred to as the A1T scenario does incorporate a transition to renewable energy sources circa 2040, the IPCC scenarios which generate the highest predicted temperature increases fail to take this into account.
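To put a rough number on that trend, here is a minimal sketch which assumes the halving-per-decade rate holds steadily and takes 2007 as the baseline year. Both are assumptions made for illustration, and `relative_price` is a hypothetical helper.

```python
def relative_price(years_elapsed, halving_period=10.0):
    """Price as a fraction of today's, if it halves every halving_period years."""
    return 0.5 ** (years_elapsed / halving_period)

baseline_year = 2007  # assumed starting point
for year in (2030, 2040):
    fraction = relative_price(year - baseline_year)
    print(f"{year}: about {fraction:.0%} of the {baseline_year} price")
```

On those assumptions, solar power would cost about a fifth of its 2007 price by 2030, and about a tenth by 2040.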

It was noticeable in the 2001 IPCC reports that the effect of changing from one simulation model to another had a greater effect upon the projected temperature increase than the effect of changing the input scenario for a fixed simulation model. As Lomborg put it, the 'noise' created by the various simulations is greater than the strength of the signal. This casts significant doubt upon the reliability of the global climate models which underpin the IPCC reports.

The IPCC chairman, R.K. Pachauri, says "I hope this report will shock people and governments into taking more serious action." My worry, however, is that the scientific understanding and data are being manipulated to meet this pre-conceived requirement. It may well be necessary to exaggerate the severity of anthropogenic global warming in order to galvanise governments into action, but the long-term consequence may be that the credibility of scientists becomes eroded in the public consciousness.

Sunday, January 28, 2007

Quantum constructor theory

I'm off to Oxford this Thursday to see David Deutsch's talk on 'Quantum constructor theory', users.ox.ac.uk/~ppox/general/seminar.html (4:30pm, 10 Merton St, for those interested in popping along). Deutsch, of course, is the inventor of quantum computing, an advocate of the many-worlds interpretation of quantum theory, and a chap with some interesting thoughts on the relationship between physics, mathematics, and computation. Deutsch gives some hints about what quantum constructor theory might be in this interview at Edge.org (www.edge.org/3rd_culture/deutsch/deutsch_index.html):

What sorts of computations do physical processes correspond to; which of these 'computations' can be arranged with what resources? And which sorts can't be arranged at all? What little we know about this new subject consists of a few broad limitations such as the finiteness of the speed of light. The theory of computability and complexity theory give us more detail on the quantum side. But a big technological question in my field at the moment is, can useful quantum computers actually be built? The basic laws of physics seem to permit them. We can design them in theory. We know what physical operations they would have to perform. But there is still room for doubt about whether one can build them out of actual atoms and make them work in a useful way. Some people are still pessimistic about that, but either way, that debate is not really a scientific one at the moment, because there is no scientific theory about what can and can't be built. Similar questions are raised by the whole range of nanotechnology that has been proposed in principle. So that's where a quantum constructor theory is needed.

Saturday, January 27, 2007

Hurrah! 'Top Gear' is back!

Probably the best TV programme in the world, 'Top Gear' returns on BBC2 this Sunday evening. Needless to say, the programme also marks the return of Richard Hammond, critically injured last September when the jet-powered car he was driving suffered a tyre failure at just below 300mph.

Whilst ostensibly a TV programme about cars, Top Gear metamorphosed some years ago into a general entertainment and comedy show. In this respect, it approaches TV perfection. The mix of items is superb, switching from car-tests, to news features, to stunts, to the 'star-in-the-reasonably-priced-car', and to the latest competitive challenge posed to the co-presenters, Jeremy Clarkson, James May, and Richard Hammond. The chemistry of the co-presenters is perfect, and Clarkson's sardonic wit is fashionable again now that people have tired of gliberal politics. The quality of the filming and editing is fantastic, with clever and imaginative use of filters and lenses and location. As Clarkson pointed out in the following article,

driving.timesonline.co.uk/article/0,,22749-2372412_1,00.html

"the average shooting ratio for a modern television programme is about 20 to one. In other words, you shoot 20 minutes of tape for every one minute that makes it to the screen. Top Gear works on a ratio of 250 to one. Top Gear, and I’m not bragging because this part has nothing to do with me, is probably the best-made programme in the world."

Friday, January 26, 2007

Scalextric ingenuity

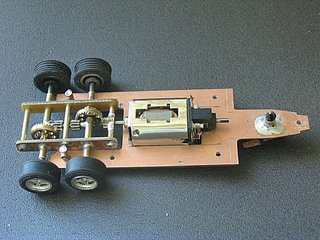

A few weeks ago, Autosport ran a nice piece on 'F1 cars that never raced', which included the Williams six-wheeler. Whilst the Tyrrell P34 of 1976 famously pioneered the idea of six wheels on a Formula 1 car, the Tyrrell employed four small wheels at the front, and, given that the rear wheels on a 1970s Formula 1 car were much bigger than the fronts, this failed to reduce the frontal area of the car. In contrast, the Williams six-wheelers of 1981 and 1982 had four driven wheels at the rear. This reduced frontal area, and, by virtue of splitting the torque amongst the contact patches of four tyres, rather than two, improved traction into the bargain.

The Williams FW07s of 1979-1981 were, to my mind, the most beautiful of Formula 1 cars. They were simple, effective, and elegant. Scalextric duly brought out a model of the FW07, one instance of which enjoyed many happy hours hurtling around my own 'figure-of-eight' track, clattering along the plastic armco, the aroma of over-heating electric motors diffusing through the air of the dining room.

By good fortune, I stumbled across this article on the internet today, in which an enthusiast describes how he converted a conventional Scalextric FW07 into a six-wheeled version. The casual ingenuity of this type of thing is fantastic:

p097.ezboard.com/fslotcarouthouse93633frm37.showMessage?topicID=15.topic

Thursday, January 25, 2007

The arrival of winter

From Sunday evening of this week, the weather forecasts predicted 'a widespread risk of snow and ice' in the South of England. The snow duly arrived on Tuesday night, but only in Sussex and Kent, where it normally seems to fall. At no stage did I hear or see a forecast which was willing to specify the counties in which the snowfall could be expected. This is exactly the type of information that commuters desire, and its absence is puzzling because the Met Office claims that its 3-day weather forecasts are now as accurate as their 1-day forecasts were 20 years ago. Perhaps the overall accuracy the Met Office claims for its forecasts is skewed by the accuracy of the forecasts issued under inherently predictable conditions, such as a 'blocking' high (pressure zone) during the summer or winter.

This week's New Scientist contains an article on the verification of weather forecast accuracy (27 January 2007, p32-35), which claims that forecasters at the Met Office receive an annual bonus determined by the verification scores for their forecasts. The article points out that forecasters can improve their scores by hedging, and by blurring the specifics of their forecasts. This perplexes me on two counts: firstly, that forecasters should be incentivized to make their forecasts less specific; and secondly, the very notion that the accuracy of a forecast is still dependent upon the forecaster producing it, seems to contradict the impression which the Met Office tries to convey about its prediction methodology. Atmospheric and oceanic data from weather stations and satellites are fed into meteorological models as initial conditions, and the Met Office supercomputers then predict the evolution of the meteorological parameters. In principle, making a forecast should then involve nothing more than reading off the predicted temperatures, pressures, cloudcover, and rainfall from these simulations, and translating them into a digestible package for the public. However, the very fact that forecasters receive bonuses based upon the accuracy of their forecasts suggests that there is actually still a large discretionary element to the production of a forecast. Perhaps one needs to have a good understanding of the idealisations and assumptions which have been incorporated into the atmospheric models if one is to judge the reliability of their predictions; there are a variety of atmospheric models, and perhaps the skill comes from understanding the circumstances in which each model is reliable or unreliable.

Tuesday, January 23, 2007

Companies are psychopaths

There's been something of a brouhaha in the UK this week over the scavenging of ship-wrecked goods from Branscombe beach. A cargo ship, the MSC Napoli, transporting approximately 2,400 containers, was deliberately grounded in Lyme Bay after suffering a 'structural failure' in last Thursday's storms. A number of the containers and their contents have been washed up on the beach, and various goods, including BMW bikes, have been half-inched by happy middle-class beach-combers.

Whilst the authorities have accused such individuals of unmitigated greed, I think the action of the scavengers is largely justified. In radio phone-ins this morning, members of the public seemed to generally agree that it was wrong to take the property owned by individuals, but the property owned by companies was fair game. As one individual said, "companies screw us most of the time, so why shouldn't we screw them for once?" Quite.

This reminded me of the fact that companies, if treated as people, would be considered psychopathic. A company is totally selfish, has no conscience, feels no compulsion to speak the truth, and acts to destroy other companies. Those companies who pretend to be moral or environmental, try to promote these 'brand-values' as a calculated means to furthering the selfish interests of the company. Just recently, a delivery man from Amtrak broke my letterbox clean off. I've written to the local Amtrak depot manager, and even the chief executive, seeking some compensation. Needless to say, because Amtrak know that they can get away without doing anything, that's exactly what they do. People who work for companies often feel under no obligation to think morally, and consider that they are merely following 'company policy'. Rather like soldiers in wartime are merely 'following orders'.

In our current capitalist liberal democracy, government legislation should, in theory, act to constrain the power of companies, but it's a difficult balance to strike when the population desires economic growth. Is there an alternative political-economic structure to the 'companies plus government' structure? We know that a communist society, in which the 'means of production' are owned by the government, is a completely dysfunctional society, so this is not a viable alternative. Is there, however, a hybrid unit of structure, a combination of company and government, from which a better society could be built?

Monday, January 22, 2007

Encyclopedias and handbooks

For those who like their academic books large and compendious, a couple of interesting works have been published in the past year. Firstly, we have the 'Philosophy of physics' incarnation of the multi-volume 'Handbook of the philosophy of science'. This two-volume, 1522 page work is edited by Jeremy Butterfield and John Earman, published by Elsevier, and reasonably priced at £125.

Also of interest is the 'Encyclopedia of mathematical physics', edited by J.-P. Francoise, G. Naber & Tsou Sheung Tsun, and published by the Academic Press imprint of Elsevier. This five-volume work comprises 3500 pages, and will make an £855 hole in your bank account.

As usual these days, many of the articles are freely available as electronic pre-prints. Of particular interest are Martin Bojowald's account of Quantum Cosmology, arxiv.org/PS_cache/gr-qc/pdf/0603/0603110.pdf, (and note Bojowald's excellent choice of references!); George Ellis's 'Issues in the philosophy of cosmology', arxiv.org/PS_cache/astro-ph/pdf/0602/0602280.pdf; and NP Landsman's huge survey 'Between classical and quantum', philsci-archive.pitt.edu/archive/00002328/01/handbook.pdf.

Sunday, January 21, 2007

The Earth

I saw Eternity the other night,

Like a great ring of pure and endless light.

So said the poet Henry Vaughan, a short time, presumably, after ingesting some dubious herbal substance. Eternity, however, is rather passé, so I tried the other night to imagine the whole of the Earth as a unified active system:

Tectonic plates grinding rock together with geologic patience and continental force; a global geomorphological system of erosion, transportation and deposition; water percolating through soil and rock in dark, claustrophobic, subterranean solitude; hydrological networks of foaming mountain cataracts, and streams, and sedate, waltz-inspiring rivers, pumping water into the oceans; differential heating of the atmosphere generating pressure and temperature differences which dissipate via storms, depressions and jet streams; ocean currents, waves and tides; patterns of genes rippling amongst the biological species; cycles of agricultural land use; a vast network of villages, towns and cities, joined by road, rail, and river; a network of water supply and sewerage tunnels and pipes; an international network of oil and gas pipelines, suffused with hydrocarbons; an electricity network of pylons, lines, cables, power stations and sub-stations, surging with potency; a telecommunications network of trunk lines, local exchanges, land lines, undersea cables and microwaves, linked to a constellation of satellites in orbit; a network of ports and shipping lanes across the oceans; a network of airports and airways puncturing the atmosphere; an economic system with prodigious flows of capital between accounts, assets, shares and derivatives; and an exponential system of information, memes and knowledge, supervening upon the genetic systems.

This is difficult to imagine, all at the same time.

Saturday, January 20, 2007

Lauren Laverne

The Culture Show returns tonight on BBC2, after a month's break, and Lauren Laverne will be presenting once more. Now, don't get me wrong, I like Lauren Laverne. She's very attractive, and she has one of those charming regional accents which, these days, functions as an instant passport to a job with the BBC. However, on this particular show I reckon she is, how shall I say, 'winging it'. Now, this is actually a subtle skill: whilst 'blagging it' involves a dexterous and extemporary use of words to conceal a lack of knowledge or truthfulness, winging it often requires one to judiciously remain silent, or to encourage other people to talk, in order to conceal a lack of knowledge or preparation.

Lauren is on reasonably firm ground with, say, pop music or popular cinema, but when she's chatting to the bloke with the double-barrelled name about some installation or exhibition, I don't really sense a great depth of interest. I sense that Lauren is simply passing through, en route to Saturday night TV on BBC1. Whereas Verity Sharp, I feel, is very much at her final destination.

Friday, January 19, 2007

"It's not cinéma vérité, it's film noir!"

Not for the first time tonight, I pondered how I can get onto Newsnight Review on BBC2. One of the panellists this week was an elegant American writer and academic, Sarah Churchwell, (news.bbc.co.uk/1/hi/programmes/newsnight/review/3542918.stm) who, whilst discussing the new Paul Verhoeven film, 'Black Book', uttered the memorable rejoinder which forms the title of this blog. This is the type of woman I'd like to meet. We'd become instantly entwined in an intense and tumultuous relationship, a drama which would unfold upon the canvas of numerous open-air cafes in various European cities, our conversation laced with politics and philosophy and passion.

But first, I need to get onto Newsnight Review. One possible route is to become an NME journalist for 10 years or so, and then to migrate onto television as a 'pithy opinion dispenser'. Whilst I would enjoy ripping into Coldplay albums, this would be something of a long campaign. Another possible route is to become a modern poet, in the style of Tom Paulin or Ekow Eshun. I quite liked Mr Paulin's style of withering criticism ("I've never seen anything so completely abysmal, and awful, and reprehensible, and downright rotten in my entire life!"). I reckon I need to get myself to some of those literary parties.

Perhaps I should make a show, in Dave Gorman style, about my attempts to get onto Newsnight Review. If this show were to be reviewed on Newsnight Review, that would indeed be uber-ironic.

Monday, January 15, 2007

Ferrari F2007

This year's Ferrari F1 car, the F2007, was unveiled on Sunday. The most noteworthy aspect of it is the inclusion, for the first time on a Ferrari, of a 'zero-keel' front suspension.

Back in 1990, Harvey Postlethwaite and Jean-Claude Migeot introduced the Tyrrell 019 (below), the first Formula 1 car to feature a 'raised nose'. The underbody of an F1 car generates a lot of downforce, and the greater the quantity of airflow under the car, the greater this downforce. The rationale of the raised nose is simply to maximise the airflow under the car by removing an obstruction, and the raised nose duly became the norm in F1 over the next decade. However, with the nose raised, the points where the lower front suspension wishbones previously attached to the car no longer existed. The universal solution adopted was to attach the lower wishbones to a single keel, hanging from beneath the raised nose.

Then, in 2001, Sergio Rinland's Sauber introduced the twin-keel solution, in which the lower wishbones on either side attached to their own keel, hanging from the outer underside of the nose. This opened up the central region, enhancing airflow off the front wing and underneath the car. The benefits, however, were not unambiguous; there were both structural limitations, and limitations placed upon the front suspension geometry. Ferrari resolutely stuck with a single keel, and their multiple championship success largely vindicated this decision. However, by 2005, McLaren had taken the twin-keel to its logical conclusion: a zero-keel, in which the lower wishbones are angled upwards, and attach to the lower flanks of the nose. McLaren enjoyed significant success in 2005, and really only lost the championship through poor reliability. However, they struggled in 2006, which raises the question, 'Why have Ferrari chosen to go the zero-keel route for 2007?'

Here is one possible answer. In 2006, engine capacity was reduced by the governing body from 3.0 litres to 2.4 litres. This made the reduction of drag more important, and the rear wing on an F1 car generates far more drag than the front wing. To reduce drag, the teams reduced the angle of incidence of the rear wings, but retained the same amount of downforce by increasing the level of flow to the rear wing. This was done by the clever use of 'flow conditioners' atop the nose, which can adjust the relative amount of airflow the front and rear wings are subject to.

Autosport journalist Mark Hughes reported before Christmas that the new Bridgestone tyres for 2007 are such that the rears suffer worse wear than the fronts, and the fronts are more difficult to warm up. As Hughes points out, this will force the designers to shift the weight distribution and centre of aerodynamic pressure forwards, taking load off the vulnerable rears, and helping to generate heat in the fronts. This change in the centre of aerodynamic pressure will make the front wing more important again, and perhaps justifies the use of a zero-keel.

Sunday, January 14, 2007

Fiat lux

I'm recording the thermal radiation from the monopole using a gamma-ray spectrometer, and using some graphical post-processing software to reconstruct an image of the created universe from the thermal radiation:

Look at that!....Me God, it's beautiful!

Hello,...what's this?

Oh shit.

Universe creation

Saturday, January 13, 2007

Switching on now

Ok, this is it...lasers on...temperatures and pressures rising...now stabilising.

I'm focusing the electron beam onto the magnetic monopole now...jeez, look at the coruscations and scintillations...

Universe creation

Creation event notification

Tonight's attempted universe creation event will occur at midnight UTC, and will be blogged live.

Universe creation

Creating a universe - Days 5 and 6

The Deus ex machina has never been to my taste, so I have chosen a theory of gravity which permits the existence of spiral galaxies without the need for dark matter (see previous post).

I have also been working out the fiddly details of how my choice of the elementary particle physics determines the stable and bound systems which can exist on medium length-scales. By adding an extra gauge field, the 'Monster' force field, I have obtained a chemical periodic table subtly different from our own. This, in turn, has changed the structures of the cellular systems which can exist in my universe. Whilst oxidation and reduction are the driving mechanisms behind the geochemistry and living metabolisms in our own universe, my own universe employs a more sophisticated biological geochemistry. As in our own universe, however, I will allow evolution by natural selection to generate the actual living species and ecosystems.

Calibrating my particle accelerator to produce exactly the type of universe I want to produce has been a challenging exercise. I am not, after all, a miracle worker. However, I think I have solved most of the remaining, niggling problems.

I plan to create my universe at midnight UTC tonight, on-budget and on-schedule.

Universe creation

Friday, January 12, 2007

The Millennium Simulation, and maps of dark matter

General relativity is not the only possible classical theory of gravity. Extensions to general relativity have been proposed, such as 'Higher Derivative Gravity', and 'Scalar-Tensor' theories. (Brans-Dicke theory is one example of the latter). Is there any reason to believe that these other theories are physically relevant? Well, in fact, there are actually some serious discrepancies between the predictions of general relativity and observational data, but physicists have chosen to postulate 'dark matter' and, more recently, 'dark energy', to explain these discrepancies, rather than resort to tinkering with general relativity.

The motion of stars as they orbit a spiral galaxy is not correctly accounted for by general relativity; moving outwards from the centre of a spiral galaxy, the acceleration experienced by the stars in their orbital trajectories decreases, and this decrease follows predictions until the acceleration drops below a certain critical threshold, at which point the decrease in acceleration with radial distance is not as rapid as it should be. The extra acceleration is of the order 1/10^8 cm per second per second. Astrophysicists and cosmologists have postulated the existence of dark matter in order to explain this extra acceleration without having to change their theory of gravity.
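To get a feel for where this threshold bites, here is a rough back-of-the-envelope sketch in Python. It treats a galaxy as a point mass (a crude assumption which ignores the extended disc), takes a hypothetical luminous mass of 10^11 solar masses, and asks at what radius the Newtonian acceleration GM/r^2 falls to the 10^-8 cm per second per second level quoted above. The function names and the chosen mass are illustrative assumptions, not anything taken from the literature.

```python
import math

# Physical constants in CGS units.
G_CGS = 6.674e-8      # gravitational constant, cm^3 g^-1 s^-2
M_SUN_G = 1.989e33    # solar mass, g
KPC_CM = 3.0857e21    # one kiloparsec, cm

# Threshold quoted in the text: of the order 10^-8 cm/s^2.
A_CRIT = 1.0e-8

def newtonian_acceleration(mass_g, radius_cm):
    """Acceleration towards a point mass, GM/r^2, in cm/s^2."""
    return G_CGS * mass_g / radius_cm**2

def threshold_radius_kpc(mass_g, a_crit=A_CRIT):
    """Radius (in kpc) at which GM/r^2 has dropped to a_crit."""
    return math.sqrt(G_CGS * mass_g / a_crit) / KPC_CM

# Hypothetical galaxy: 10^11 solar masses of luminous matter (assumed value).
galaxy_mass = 1.0e11 * M_SUN_G
r_kpc = threshold_radius_kpc(galaxy_mass)
print(f"Acceleration falls to {A_CRIT:g} cm/s^2 at roughly {r_kpc:.0f} kpc")
```

Under these assumptions the threshold is reached at a radius of the order of ten kiloparsecs, which is comparable to the size of a galactic disc. That is why the discrepancy shows up in the outer parts of spiral galaxies rather than near the centre.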

Intriguingly, the acceleration experienced by the Pioneer 10 and Pioneer 11 space-probes as they reach the edge of our solar system also fails to agree with theoretical predictions. There appears to be an extra acceleration towards the Sun of the order 1/10^8 cm per second per second. Dark matter cannot be invoked to explain this discrepancy, so this phenomenon remains unexplained.

Yet cosmologists continue to assume that dark matter exists, and in 2005 the results of the 'Millennium Simulation' were triumphantly announced. This was a super-computer simulation of the formation and evolution of galactic structure, which assumed a dark matter model, and, by simulating a cube of space 2.230 billion light-years on a side, was able to obtain a final distribution of matter which agreed with current observations.

In a nice paper this week, Stéphanie Ruphy highlights the assumptions which went into this simulation, (philsci-archive.pitt.edu/archive/00003123/01/Simulation_Phil-Archi.doc):

Quite obviously, a basic presupposition in this model is that there is such a thing as dark matter, that is, some unknown, exotic form of matter which is not seen but which is supposed to dominate the dynamics of the universe. Postulating dark matter is an answer to some puzzling observations of galactic dynamics. But there are alternative interpretations of these observations. Here again, it is worth quoting what cosmologists themselves have to say about dark matter:

“Many attempts have been made to identify its nature, […] but what it is composed of is still unknown. Laboratory searches are under way to try to detect this matter, so far without success. A key question is whether its apparent existence is due to our using the wrong theory of gravity on these scales. This is under investigation, with various proposals for modified forms of the gravitational equations that might explain the observations without the presence of large quantities of dark matter.” (George Ellis, 2006)

And the same goes for the so-called “dark energy” which is another key ingredient of recent cosmological models. There are other ways to interpret observations (in that case observations of very distant supernovae) than to postulate the existence of a form of energy, which we know nothing about, except that its effect would be to accelerate the expansion of the universe.

...

For in light of what has been said on the existence of alternative sub-models, there is no good grounds to claim that the path actually taken by modelers is the only path leading to a plausible (in the foregoing sense) simulated universe. And note that if the Millennium Run does not have (yet) serious competitors, it is not because alternative paths have been also fully developed and dismissed on empirical grounds. Rather, if only because of the cost in terms of material and intellectual resources of developing alternative simulations built with different modeling ingredients, only one path has been taken to its end, that is, to a level of details and to a scale large enough to allow significant comparison with observations. There is thus no good grounds to exclude that, had the cosmologists the resources to fully develop alternative paths, they would come up with different, but equally plausible representations of the evolution of the universe.

A paper published this week in 'Nature' claims to have mapped the distribution of dark matter in a small region of the sky. The map was produced by analysing the deviation and gravitational lensing of light from background galaxies which cannot be explained by the normal matter in foreground galaxies (under a conventional theory of gravity):

news.bbc.co.uk/1/hi/sci/tech/6235751.stm

The article on the BBC website states that:

For astronomers, the challenge of mapping the Universe [using the distribution of normal, luminous matter] has been described as similar to mapping a city from night-time aerial snapshots showing only street lights.

Dark matter is invisible, so only the luminous galaxies can be seen directly. The new images are equivalent to seeing a city, its suburbs and country roads in daylight for the first time.

This, of course, is completely wrong. Inferring the existence of dark matter from the trajectories of the light coming from background sources is analogous to mapping a city from night-time aerial shots of the motion of cars through the city; you still have to make an assumption about the relationship between the trajectories of the cars and the distribution of buildings and people. In the astronomical case, the deviations of light which cannot be explained by the normal, luminous matter under a conventional theory of gravity could well be explained by the normal, luminous matter under a different theory of gravity.

Thursday, January 11, 2007

Creating a universe - Day 4

It's becoming slightly tricky to keep track of all the details in my universe, so I have decided to employ UML (Unified Modelling Language) to represent my design. Given that I am designing a 'system of systems', I have also decided to draw upon the powerful intellectual resources of Systems Engineering. I will duly represent the people in my universe as 'stakeholders' in the system of systems.

However, I may have been a trifle over-ambitious with the number of people I've decided to include in my universe. In fact, to make sure I don't forget about anyone, I've decided to store the specifications and life-histories of all my created people in an Excel spreadsheet.

I have decided to use the Poincaré dodecahedral space for the large-scale topology of my space-time (see the attached diagram). This, of course, will give my universe a finite spatial volume. If the observers in my universe are able to look far enough, then they will perceive multiple copies of the same region of space. In effect, they will see the backs of their own heads.

Regarding the force fields in my universe, I have decided to use the strong and electroweak forces from our own universe, but to add an extra field of my own. The gauge group of my field is the Monster group. This is a finite group containing 808017424794512875886459904961710757005754368000000000 elements. It is a 'simple' group, in the technical sense that it contains no normal subgroups other than the trivial subgroup and the group itself. The physical implication of this is that it corresponds to a single coupling constant. This extra gauge field will be tuned to add many unusual structures to my universe, far beyond those supported by the chemistry and nuclear physics of our own.
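For anyone who would rather not take that 54-digit number on trust, here is a small Python sketch which rebuilds it from the standard prime factorisation of the order of the Monster group. The variable and function names are my own; the factorisation itself is the well-known one.

```python
# Prime factorisation of the order of the Monster group:
# 2^46 * 3^20 * 5^9 * 7^6 * 11^2 * 13^3 * 17 * 19 * 23 * 29 * 31 * 41 * 47 * 59 * 71
MONSTER_FACTORS = {
    2: 46, 3: 20, 5: 9, 7: 6, 11: 2, 13: 3,
    17: 1, 19: 1, 23: 1, 29: 1, 31: 1, 41: 1, 47: 1, 59: 1, 71: 1,
}

# The number of elements as quoted in the text.
QUOTED_ORDER = 808017424794512875886459904961710757005754368000000000

def group_order(factors):
    """Multiply out a {prime: exponent} factorisation into a single integer."""
    order = 1
    for prime, exponent in factors.items():
        order *= prime ** exponent
    return order

monster_order = group_order(MONSTER_FACTORS)
print("Matches the quoted figure:", monster_order == QUOTED_ORDER)
print("Number of decimal digits:", len(str(monster_order)))
```

Python's integers are arbitrary-precision, so the product is exact rather than a floating-point approximation.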

I am already collimating and calibrating the lasers in my table-top particle accelerator, in preparation for the creation event this weekend. I shall keep you posted.

Universe creation

Wednesday, January 10, 2007

Creating a universe - Day 3

By reverse engineering our own universe, I have been able to establish the following requirements for designing the physics of a universe:

- Specify a space-time.

- Specify a set of gauge fields.

- Specify a set of elementary particles, and partition them into a finite number of generations.

- Specify the strengths of the gauge fields.

- Specify the couplings between the gauge fields and the elementary particles.

- Specify the direct ('Yukawa') couplings between elementary particles.

- Specify the 'mixing' between the elementary particles in different generations.

- Specify cosmological parameters corresponding to the initial conditions for the universe.

This list is based upon the understanding gleaned from general relativistic cosmology and the standard model of particle physics, the latter being an application of quantum field theory. Both these theories are empirically verified. I do not intend to consider how one might define a physical universe according to speculative theories such as string theory or supersymmetry.

Our own physical universe is specified as follows:

- Space-time is a 4-dimensional pseudo-Riemannian manifold with three spatial dimensions and one time dimension.

- There are three force fields: the strong nuclear force, the weak nuclear force, and the electromagnetic force. The weak and electromagnetic fields are unified in the electroweak gauge field.

- The elementary particles consist of quarks, leptons and gauge bosons. Elementary particles are divided into fermions and bosons according to the value they possess of a property called 'intrinsic spin'. If a particle possesses a half-integer value of intrinsic spin, it is referred to as a fermion, whilst if it possesses an integer value, it is referred to as a boson. The particles of the elementary matter fields are fermions and the interaction carriers of the gauge force fields are bosons. The elementary fermions come in two types: leptons and quarks. Whilst quarks interact via both the strong and electroweak forces, leptons interact via the electroweak force only. There are three generations of elementary fermions in our universe.

- The strength of each gauge field is specified by what physicists call the 'coupling constants' of the gauge field.

- To specify the couplings between the gauge fields and the elementary particles, the values of the 'charges' possessed by elementary particles are specified. For example, in the case of couplings between particles and the electromagnetic force, the strength of the coupling is determined by the electromagnetic charge of the particle.

- Our universe appears to be subject to spontaneous symmetry breaking caused by Higgs fields, in which case the values of coefficients in the Yukawa matrices specify the direct interactions between the Higgs bosons and the elementary fermions.

- The mixing between the quarks in different generations is specified by the Cabibbo-Kobayashi-Maskawa (CKM) matrix. If, as current evidence indicates, the neutrinos possess mass, then there is a corresponding notion of lepton mixing, and the CKM matrix has a lepton counterpart called the Maki-Nakagawa-Sakata matrix.

- Our universe is taken to be spatially homogeneous, and to specify cosmological parameters corresponding to the initial conditions for such a universe, the global symmetry group (or Killing Lie algebra) of the homogeneous 3-dimensional space is specified, along with the dynamical parameters which determine the time evolution of the 3-dimensional geometry, such as the Hubble parameter, and the density parameter, Omega.
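The two lists above amount to a specification template followed by one filled-in instance, and that can be sketched as a simple data structure. What follows is a hypothetical Python sketch: the class name `UniverseSpec`, its field names and the `unspecified` helper are all my own inventions, and the numerical values (coupling constants, charges, Yukawa coefficients, cosmological parameters) are deliberately left empty rather than guessed.

```python
from dataclasses import dataclass, field

@dataclass
class UniverseSpec:
    """Hypothetical container mirroring the eight requirements listed above."""
    spacetime: str
    gauge_fields: list
    elementary_particles: list
    generations: int
    coupling_constants: dict = field(default_factory=dict)
    charges: dict = field(default_factory=dict)
    yukawa_couplings: dict = field(default_factory=dict)
    mixing_matrices: list = field(default_factory=list)
    cosmological_parameters: dict = field(default_factory=dict)

    def unspecified(self):
        """Names of the requirements which have not yet been given any value."""
        return [name for name, value in vars(self).items() if not value]

# Our own universe, filled in only as far as the qualitative description goes.
our_universe = UniverseSpec(
    spacetime="4-dimensional pseudo-Riemannian manifold (3 space + 1 time)",
    gauge_fields=["strong", "electroweak"],
    elementary_particles=["quarks", "leptons", "gauge bosons"],
    generations=3,
    mixing_matrices=["CKM", "Maki-Nakagawa-Sakata"],
)

print("Still to be specified:", our_universe.unspecified())
```

The point of the `unspecified` helper is simply to show how many numbers remain to be chosen once the qualitative structure has been fixed.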

Universe creation

Tuesday, January 09, 2007

Creating a universe - Day 2

I am making excellent progress. I have already made some crucial decisions about the things I wish to include and the things I wish to exclude from my universe. My universe will be rich and varied, with a cascading hierarchy of structural levels, rich enough to include fractal structures, crystalline structures, and dendritic structures. I will use discrete and continuous structures, and I will employ evolution processes both deterministic and stochastic. I will draw upon a mathematical palette which includes the abstract structures provided by Hilbert spaces, Lie groups, differential manifolds, and fibre bundles. My universe will, however, be inconsistent with the existence of Coldplay.

I wish to re-create a number of the things we find in our own universe. In particular, I would like to produce some variations upon the landscape themes already installed in the Colorado exhibition, as seen in the photos which accompany this post. I like the existence of structure on many different length scales here; the excellent use of both foreground and background objects; and the fabulously subtle varieties of colour and texture.

I do not, of course, have a blank canvas to work with, for my universe will be created from a magnetic monopole in our own universe, and will therefore basically be made of the same stuff from which our own universe is made. I will therefore need to engage in a certain amount of reverse engineering on our own universe. This, however, is something on which I will elaborate over the days to come.

Universe creation

Creating a universe - Day 1

Over the weekend I was fortunate enough to purchase a magnetic monopole from eBay. Darker than blackness, it is now hovering, menacingly, in the corner of my living room. I have also just taken delivery of a table-top particle accelerator from dabs.com. Developed by French physicists at the Institute of Technology in Palaiseau, www.newscientisttech.com/channel/tech/dn10741-tabletop-particle-accelerator-created.html, this will enable me to bombard my magnetic monopole with energetic electrons. According to the theoretical model published last year by Nobuyuki Sakai et al., bombarding a magnetic monopole in a particle accelerator should induce the monopole to undergo inflationary expansion, creating a child universe inside the horizon of the small Reissner-Nordström black-hole space-time which contains the monopole. Crucially, the child universe will be created without the need for an initial singularity 'in the laboratory'. (See www.ex-christian.net/index.php?showtopic=10297 for the New Scientist article 'Create your own universe', and www.arxiv.org/PS_cache/gr-qc/pdf/0602/0602084.pdf for the academic paper).

So, over the next week, I shall be designing my universe, and fine-tuning my particle accelerator for the creation event. It will probably take me, oh, seven days before I'm ready for the grand event. I want to get my design right, because once the child universe has been created, it will disconnect from our own parent universe, the small black-hole in our universe will evaporate, and I will no longer be able to interact with my design. This is one project, therefore, which will definitely not be a triumph of development over design.

I shall let you know how my design is proceeding, and, of course, I may incorporate suggestions from others, if anyone would like to proffer some.

Universe creation

Monday, January 08, 2007

Gordon Bennett!

James Gordon Bennett Jr is generally held to be the first international playboy. However, in addition to indulging his opulent lifestyle around Newport, Rhode Island, Bennett also inherited control of the New York Herald from his father, subsidised Stanley's expedition to Africa to find Livingstone, and established the Gordon Bennett Cup for automobiles. This was an international competition which ran from 1900 to 1905. Each nation, however, could only enter three cars, and this peeved the French, who felt that their superiority in these matters was therefore under-represented. The French won the 1905 Gordon Bennett Cup, and although each year's winner was entitled to host the following year's event, the French refused to host the 1906 Cup, and instead ran the first ever Grand Prix...with 26 French cars, six Italian cars, and two from Germany.

Anyway, I've always been much impressed by the manner in which the origin of the expostulation 'Gordon Bennett' appeared to lie with this particular Gordon Bennett. According to Wikipedia, "in 1877 Gordon Bennett left New York after a scandal that ended his engagement to socialite Caroline May. According to various accounts, he arrived late and drunk to a party at the May family mansion, then urinated into a fireplace in full view of his hosts," (en.wikipedia.org/wiki/James_Gordon_Bennett,_Jr. and note that the full-stop is part of the URL). Sadly, the real provenance of the phrase may not be so colourful. According to an article on the BBC website, John Simpson, chief editor of the Oxford English Dictionary, claims that "The expression is probably just a euphemistic extension of 'God!' or 'Gawd!', turned into a proper name to weaken the swear-word," (news.bbc.co.uk/1/hi/magazine/6226293.stm). Even worse, there are suggestions below the main article that the phrase originates with Lieutenant-General Henry Gordon Bennett "who abandoned his command and fled to safety during the Japanese invasion of Singapore leaving his unfortunate troops behind to be captured."

I prefer the pissing-in-the-fireplace explanation, and I propose that in years to come the expostulation 'Gordon McCabe!' will be used as a synonym for pissing into the wind.

Friday, January 05, 2007

Radical politics

Ever since Tony Blair slid into power, politicians in the UK have been fond of claiming to be 'radical', whilst actually advocating and implementing rather conventional economic policies. So, as an antidote, here's a genuinely radical proposal I heard a few years back:

0% income tax, 0% expenditure tax, and 100% inheritance tax.

Nobody would pay tax during their lifetime, but their assets and capital would be taken by the government when they die. What would an economy such as this look like? Well, with 0% income tax people would have a greater incentive to work harder, so the economy would be larger. 100% inheritance tax would entail that a much greater proportion of the GDP would be taken in the form of taxation, so the government would be able to afford health and education services of an extremely high standard. Some people might try to liquidate all their assets and spend all their money before they die, but unless one's time of death can be reliably predicted, this is a difficult game to play. It might also be argued that people would resist 100% inheritance tax, but when the average lifetime enters the 80s, it means that people will generally die when their offspring are in their 50s, and therefore, in most cases, established with a family, house and career.

Just a thought for Mr Cameron!

Wednesday, January 03, 2007

Breakthrough of the Year

It is often asked whether pure mathematics is discovered or invented. I think the answer is both. Pure mathematics is an exploration of the operating space of the human mind, and because products of the human mind are considered to be inventions, pure mathematics is both invented and discovered. There is no need to postulate a 'Platonic realm' of timeless mathematical forms to accept that pure mathematics is discovered, but pure mathematical discovery is not the discovery of something which exists independently of the human mind. How are we to explain the effectiveness of mathematics in the natural sciences? Well, the mind supervenes upon the brain, and the brain is the outcome of evolution by natural selection, hence the operating space of the human mind has been shaped by the structure of the natural world. Appropriately enough, the December 2006 issue of the journal Science declared the 'breakthrough of the year' to be Grigori Perelman's proof of the Poincaré conjecture. www.sciencemag.org/cgi/content/full/314/5807/1848

This famous conjecture asserts that the 3-dimensional sphere is the only simply connected, compact, 3-dimensional manifold-without-boundary. In simple terms, a compact manifold is one which will always be of finite volume, irrespective of which geometry you place upon it. A simply connected manifold, in simple terms, is one which has no holes passing through it (when that manifold is embedded in a Euclidean space of higher dimension). A sphere in any dimension is simply connected, but the 2-dimensional surfaces of doughnuts and pretzels, for example, clearly have holes passing through them, hence they are non-simply connected. As another example, there is a 3-dimensional version of the doughnut, called the 3-torus, which is compact, but non-simply connected.
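For those who prefer symbols, the statement above can be sketched as follows. The notation is my own shorthand rather than anything taken from the Science article: "closed" abbreviates compact and without boundary, \pi_1 denotes the fundamental group (trivial exactly when a connected manifold is simply connected), and \cong denotes homeomorphism.

```latex
% Poincare conjecture, for a closed, connected 3-dimensional manifold M:
\[
  \pi_1(M) = 0 \;\Longrightarrow\; M \cong S^3 .
\]
% The 3-torus is compact but fails the hypothesis:
\[
  \pi_1(T^3) \cong \mathbb{Z}^3 \neq 0 .
\]
```

The second line is simply the 3-torus example restated: it is compact, but its fundamental group is non-trivial, so it is not simply connected and the conjecture says nothing about it.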

Perelman appears to have proved the Poincaré conjecture, and a more general statement called the Thurston geometrization conjecture, in three papers which were submitted, not to an academic journal, but to the arXiv electronic preprint repository, between November 2002 and July 2003. In the three years since, other mathematicians have been attempting to verify Perelman's claims, and once a consensus was reached, this culminated in Perelman being awarded the Fields Medal in 2006.

Intriguingly, Perelman has refused to accept the award. Since producing his proof, Perelman has abandoned his academic post, and is reportedly jobless, and living with his mother in St Petersburg. Perelman appears to be disgusted by a lack of ethics in the mathematics community, and, in particular, by claims in 2006 that two Chinese mathematicians, Cao and Zhu, had found the first complete proof. According to the article in Science, two other mathematicians, Kleiner and Lott, who attempted to expound and elaborate Perelman's proof, complained that Cao and Zhu had copied a proof of theirs and claimed it as original, and "the latter pair grudgingly printed an erratum acknowledging Kleiner and Lott's priority. This fall, the American Mathematical Society attempted to organize an all-star panel on the Poincaré and geometrization conjectures at its January 2007 meeting in New Orleans, Louisiana. According to Executive Director John Ewing, the effort fell apart when Lott refused to share the stage with Zhu."